Conceiving of a vision may be akin to seeing it, UConn researchers report in a new paper published in Psychological Science. Their findings add support to a major theory of how our brains remember and consider ideas.

Philosophers, psychologists, linguists and neuroscientists have long wondered how exactly we conceive of things we have experienced before. For sensed experiences (seeing a sunset, hearing a violin, tasting a brownie), some brain researchers suspect that thinking about something is a little bit like experiencing it. When a person thinks about a sunset, for example, part of the visual processing area of the brain lights up. But other researchers say no, the act of considering something depends on other parts of the brain. The visual area lighting up is just a side effect, according to this hypothesis.

Distinguishing between cause and effect in brain regions can be difficult. Across a series of studies, UConn cognitive scientist Eiling Yee and graduate student Charles Davis, along with other colleagues, decided to see if they could show that thinking about a visually experienced thing requires, or at least shares resources with, the visual system in the brain. If conceiving of a visually experienced thing requires help from the brain’s visual system, they thought, then busying the visual system with another task should make it harder to remember visual things.

“Thinking about a sunset with your eyes closed is different than thinking about a sunset when you’re scanning a crowded refrigerator,” looking for something to eat, Yee says. But is that because you need the visual parts of your brain both to consider a sunset and to find that food item? Or is it easier to think about the sunset with your eyes closed simply because there is less distraction?

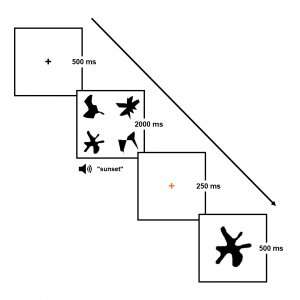

Davis, Yee, and colleagues from UConn and the Basque Center on Cognition, Brain and Language designed an experiment to find out. They had a group of undergraduates consider and remember a set of nonsense, randomly shaped blobs. While the shapes were still in front of the students, the researchers played a word; to make sure the students paid attention, the students had to judge whether the word named an animal. Some of the words on the list referred to things that are primarily experienced visually, for example “sunset.” Other words were not as visual, such as “volume.”

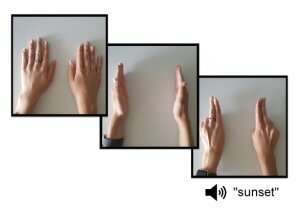

After the word test, the original shapes were removed and a single shape was shown. The student was then asked whether this shape was in the original set (see the picture for an example set of the shapes). The word judgment/shape memory task was then repeated for 240 words. Another group of undergrads heard these same words and made the same judgment on them, but instead of a visual task, they performed a manual task: a series of hand motions on a table.

The control group for the experiment was read the exact same group of words, but did not have to consider and remember shapes, nor do the manual knob-twisting.

Not surprisingly, both tasks interfered with the students’ word judgments. After all, doing two things at once is hard. But what was so interesting to the researchers was that the visual shape task interfered with the visual words (e.g., “sunset”) more than the non-visual words (“volume”). And the manual task interfered more with the non-visual words.

“What was so cool is that the two different interference tasks showed that whether a word was more affected by the visual or manual task depended on whether the thing that the word referred to was usually experienced more visually or more manually,” Yee says.

Specifically, under visual interference, people had more difficulty with visually experienced words than with manually experienced words (e.g., “volume”), but the opposite was true under manual interference.

But wait—isn’t volume a visual word? It has to do with the expanse of a space, right?

Not to psychology undergraduates, according to Davis and Yee, who had the words rated for visual experience by another group of UConn psychology students who did not participate in the experiment. Psychology undergraduates seemed to associate volume more with manual action (e.g., turning a volume knob, or pressing a button to silence their phone). But that’s the fascinating thing about this experiment, Yee says.

“It’s all about your experience,” and how your brain is used to considering concepts. “For physics majors, volume might very well equal space,” she says.

Yee and Davis hope eventually to be able to explain exactly how our shared and distinct experiences come to form our knowledge about the world. They want to pursue this research by looking more explicitly at the different parts of the brain’s perceptual system, with the goal of identifying which parts are necessary for conceiving of things. For now, they’re pointing out that their findings have a surprising implication: when you’re looking for something, having to scan through unrelated things could actually interfere with your ability to think about the very thing you are searching for.

by Kim Krieger, University of Connecticut