The human brain is a highly advanced information processor composed of more than 86 billion neurons. Humans are adept at recognizing patterns in complex networks, such as languages, without any formal instruction. Previously, cognitive scientists tried to explain this ability by depicting the brain as a highly optimized computer, but neuroscientists now debate whether this model accurately reflects how the brain works.

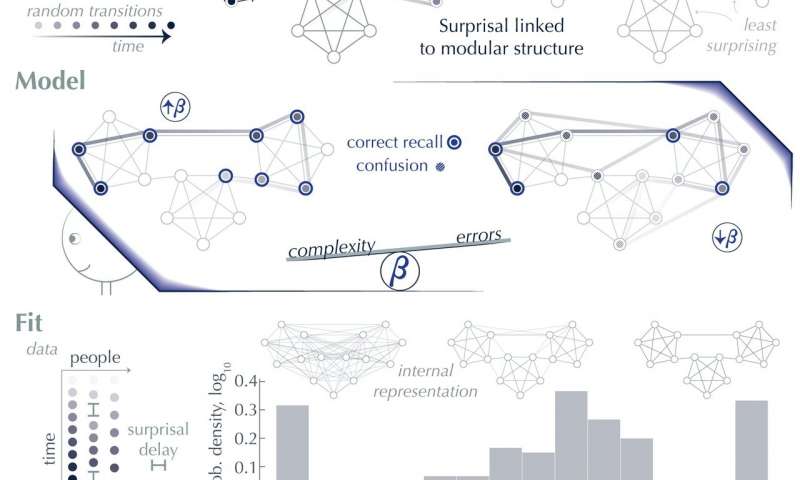

Now, Penn researchers have developed a different model for how the brain interprets patterns from complex networks. Published in Nature Communications, this new model shows that the ability to detect patterns stems in part from the brain’s goal to represent things in the simplest way possible. Their model depicts the brain as constantly balancing accuracy with simplicity when making decisions. The work was conducted by physics Ph.D. student Christopher Lynn, neuroscience Ph.D. student Ari Kahn, and professor Danielle Bassett.

This new model is built upon the idea that people make mistakes while trying to make sense of patterns, and these errors are essential to get a glimpse of the bigger picture. “If you look at a pointillist painting up close, you can correctly identify every dot. If you step back 20 feet, the details get fuzzy, but you’ll gain a better sense of the overall structure,” says Lynn.

To test their hypothesis, the researchers ran a set of experiments similar to a previous study by Kahn. That study found that when participants were shown repeating elements in a sequence, such as A-B-C-B, etc., they were automatically sensitive to certain patterns without being explicitly aware that the patterns existed. “If you experience a sequence of information, such as listening to speech, you can pick up on certain statistics between elements without being aware of what those statistics are,” says Kahn.

To investigate how the brain automatically picks up on such complex associations within sequences, the researchers showed 360 study participants a computer screen with five gray squares corresponding to five keys on a keyboard. As two of the five squares changed from gray to red, the participants had to strike the computer keys that corresponded to the changing squares. To the participants, the pattern of color-changing squares appeared random, but the sequences were actually generated using two kinds of networks.

The researchers found that the structure of the network impacted how quickly the participants could respond to the stimuli, an indication of their expectations of the underlying patterns. Responses were quicker when participants were shown sequences that were generated using a modular network compared to sequences coming from a lattice network.

While these two types of networks look different to the human eye at a large scale, they are actually statistically identical to one another at small scales. Both have the same number of nodes and the same number of connections between them, even though the overall shape is different. “A computer would not care about this difference in large-scale structure, but it’s being picked up by the brain. Subjects could better understand the modular network’s underlying structure and anticipate the upcoming image,” says Lynn.
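As a rough illustration of how two networks can match at small scales yet differ in overall shape, the sketch below builds a modular graph and a ring lattice in which every node has exactly four connections, then produces a sequence by taking a random walk on either one. The sizes (15 nodes, three modules of five), the function names, and the random-walk procedure are assumptions chosen for illustration; the article does not spell out how the researchers constructed their networks or sequences.

```python
import random


def ring_lattice(n=15, k=2):
    """Ring lattice: each node links to its k nearest neighbours on each side."""
    return {
        i: sorted({(i + d) % n for d in range(-k, k + 1) if d != 0})
        for i in range(n)
    }


def modular_graph(n_modules=3, size=5):
    """Densely connected modules joined in a loop by single bridge edges.

    Inside a module every pair is linked except the two boundary nodes,
    which instead bridge to the neighbouring modules. With size=5 this
    leaves every node with four connections, matching the lattice.
    """
    adj = {i: set() for i in range(n_modules * size)}
    for m in range(n_modules):
        first, last = m * size, m * size + size - 1
        for a in range(first, last + 1):
            for b in range(a + 1, last + 1):
                if (a, b) != (first, last):
                    adj[a].add(b)
                    adj[b].add(a)
        # Bridge from this module's last node to the next module's first node.
        nxt = ((m + 1) % n_modules) * size
        adj[last].add(nxt)
        adj[nxt].add(last)
    return {i: sorted(nb) for i, nb in adj.items()}


def random_walk(adj, steps, seed=0):
    """Return a sequence of nodes where each step moves to a random neighbour."""
    rng = random.Random(seed)
    node = rng.choice(list(adj))
    walk = [node]
    for _ in range(steps - 1):
        node = rng.choice(adj[node])
        walk.append(node)
    return walk


if __name__ == "__main__":
    for name, graph in [("modular", modular_graph()), ("lattice", ring_lattice())]:
        degrees = {len(nb) for nb in graph.values()}
        print(name, "degrees:", degrees, "walk:", random_walk(graph, 12))
```

Because each node in both graphs has the same number of neighbours, a single step of the walk looks statistically the same in either network; only longer stretches of the sequence reveal that the modular walk tends to linger inside one cluster.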

Using tools from information theory and reinforcement learning, the researchers were able to use this data to implement a metric of complexity called entropy. “Being very random is the least complex thing you could do, whereas if you were learning the sequence very precisely, that’s the most complex thing you can do. The balance between errors and complexity, or negative entropy, gives rise to the predictions that the model gives,” says Lynn.

Their resulting model of how the brain processes information depicts the brain as balancing two opposing pressures: complexity versus accuracy. “You can be very complex and learn well, but then you are working really hard to learn patterns,” says Lynn. “Or, you have a lower complexity process, which is easier, but you are not going to learn the patterns as well.”

With their new model, the researchers were also able to quantify this balance using a parameter beta. If beta is zero, the brain makes a lot of errors but minimizes complexity. If beta is high, then the brain is taking precautions to avoid making errors. “All beta does is tune between which is dominating,” says Lynn. In this study, 20% of the participants had a small beta, 10% had high beta values, and the remaining 70% were somewhere in between. “You do see this wide spread of beta values across people,” he says.
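The role of beta can be sketched with a standard maximum-entropy construction. The article does not give the model's equations, so everything here is an assumption for illustration: a hypothetical learner recalls an item that is some number of steps "off" from the correct one, the error cost is taken to be that offset, and complexity is taken to be negative entropy. Minimizing beta times the expected error minus the entropy then yields a distribution proportional to exp(-beta * offset).

```python
import math


def recall_distribution(beta, max_offset=10):
    """Distribution over recall offsets that minimizes beta * error - entropy.

    Assumes (hypothetically) that the error cost of an offset d is d itself.
    """
    weights = [math.exp(-beta * d) for d in range(max_offset + 1)]
    total = sum(weights)
    return [w / total for w in weights]


def expected_error(p):
    """Average offset, used here as a stand-in for how many errors are made."""
    return sum(d * q for d, q in enumerate(p))


def entropy(p):
    """Shannon entropy in nats; higher entropy means a less complex strategy."""
    return -sum(q * math.log(q) for q in p if q > 0)


def free_energy(p, beta):
    """The quantity being balanced: beta * expected error minus entropy."""
    return beta * expected_error(p) - entropy(p)


if __name__ == "__main__":
    for beta in (0.0, 0.5, 2.0, 5.0):
        p = recall_distribution(beta)
        print(
            f"beta={beta:>3}: error={expected_error(p):.3f} "
            f"entropy={entropy(p):.3f}"
        )
```

In this toy version, a beta of zero gives a uniform distribution (maximum entropy, many errors), while a large beta concentrates nearly all probability on the correct item (few errors, low entropy), which mirrors the tuning behavior Lynn describes.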

Kahn says that this idea of balancing forces wasn’t surprising, given the huge amount of information the brain has to process under a limited amount of resources and without spending too much time on simple decisions. “The brain is already using up a huge amount of metabolic costs, so you really want to maximize what you are getting out,” he says. “If you think about something as basic as attention, there is an inherent trade off in maximizing accuracy versus everything else you are ignoring.”

And what about the role of making mistakes? Their model provides support for the idea that the human brain isn’t an optimal learning machine but rather that making mistakes, and learning from them, plays a huge role in behavior and cognition. It seems that being able to look at complex systems more broadly, like stepping away from a pointillist painting, gives the brain a better idea of overall relationships.

“Understanding structure, or how these elements relate to one another, can emerge from an imperfect encoding of the information. If someone were perfectly able to encode all of the incoming information, they wouldn’t necessarily understand the same kind of grouping of experiences that they do if there’s a little bit of fuzziness to it,” says Kahn.

“The coolest thing is that errors in how people are learning and perceiving the world are influencing our ability to learn structures. So we are very much divorced from how a computer would act,” says Lynn.

The researchers are now interested in what makes the modular network easier for the brain to interpret and are also conducting functional MRI studies to understand where in the brain these network associations are being formed. They are also curious whether a person’s balance of complexity and accuracy is fluid, meaning whether people can change it on their own or whether it is “set.” They hope to run experiments using language inputs in the future.

“After better understanding how healthy adult humans build these network models of our world, we are excited to turn to the study of psychiatric conditions like schizophrenia in which patients build inaccurate or otherwise altered models of their worlds,” says Bassett. “Our initial work paves the way for new efforts in the emerging field of computational psychiatry.”

University of Pennsylvania