University of Texas at Dallas researchers have developed smartphone-based apps that solve the biggest problems for people with hearing loss: filtering out background noise and improving speech perception.

One of the most recent apps, detailed in a study published online July 27 in the Journal of the Acoustical Society of America, uses artificial intelligence to remove extraneous babble, street noise and other background sounds through a smartphone paired with earbuds or other devices over a wired or wireless connection. The study is one of 67 that the researchers have presented on their work for people with hearing loss in academic journals and at conferences since 2014.

The latest work, funded by a five-year, $1.86 million grant (5R01DC015430-05) from the National Institute on Deafness and Other Communication Disorders of the National Institutes of Health (NIH), is part of an effort to develop an open-source research platform for speech processing and hearing improvement. The NIH also provided a two-year, $522,000 grant (5R56DC014020-02) in 2015 to use smartphone technology to improve hearing devices.

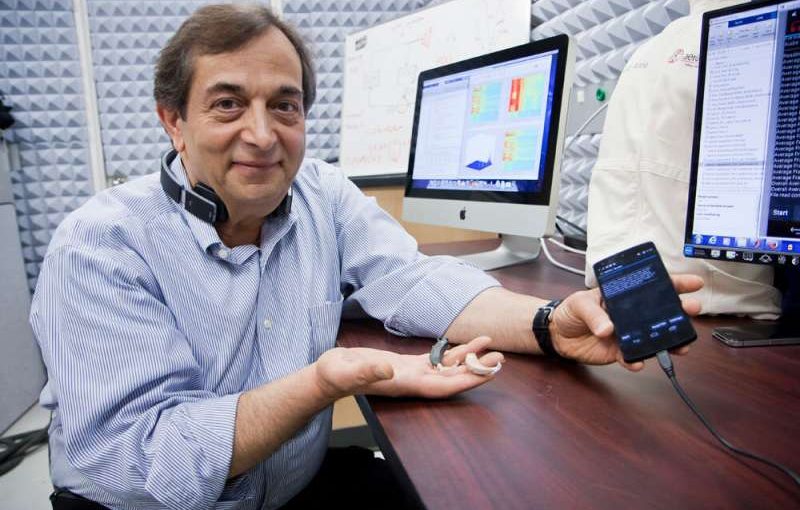

Dr. Issa Panahi, professor of electrical and computer engineering in the Erik Jonsson School of Engineering and Computer Science and principal investigator of the projects, said the apps run in real time, so the processed signal stays in sync with the speaker’s lip movements. The technology can locate and display the direction of the speech source, suppress background noise and improve speech quality and perception.

“If you talk to me in a noisy environment, I can still recognize your words well, and the speech is not distorted when our smartphone-based apps are used,” Panahi said. “That is what a person with hearing loss needs.”

Worldwide, about 466 million people, including 34 million children, experience disabling hearing loss, according to the World Health Organization. About 15% of American adults report some trouble hearing, according to the NIH.

“In our research we strive to develop affordable platforms and assistive tools that would help people with hearing disorders and improve their quality of life,” Panahi said.

Preliminary tests of the apps conducted at the UT Dallas Callier Center for Communication Disorders have shown improved hearing, with a 22% benefit for people with impaired hearing and a 29% benefit for people without hearing loss, said Dr. Linda Thibodeau, co-principal investigator on the project and professor of speech, language, and hearing in the School of Behavioral and Brain Sciences.

“Persons with hearing loss typically report difficulty hearing speech in noisy environments despite sophisticated noise reduction circuitry in personal assistive technology,” Thibodeau said. “Some available solutions that provide significant benefit involve placing a remote microphone close to the talker to transmit the signal directly to the listener. However, these remote microphone systems can be very expensive, and sometimes awkward to use in practice.”

The researchers developed noise-filtering and speech-enhancement apps that use only a smartphone’s built-in microphones. The apps, which have been tested on both Android and iOS smartphones, can also be used with a hearing-assist device. The technology indicates where the noise is coming from, so users can change the phone’s orientation to get better sound quality.
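The article does not say how the apps estimate direction. One textbook approach with two built-in microphones is time difference of arrival: find the delay that best aligns the two recordings by cross-correlation, then convert it to an angle with sin(θ) = c·τ / d. The sketch below assumes equal-length signals, a hypothetical 0.14 m microphone spacing and a 48 kHz sample rate; the function names are illustrative, not the team's code.

```python
import math

SPEED_OF_SOUND_M_S = 343.0  # speed of sound in air at roughly 20 degrees C


def estimate_delay(mic_a, mic_b, max_lag):
    """Lag (in samples) that maximizes the cross-correlation of two equal-length mic signals.

    A positive result means the sound reached mic_a first and mic_b `lag` samples later.
    """
    n = len(mic_a)
    best_lag, best_score = 0, float("-inf")
    for lag in range(-max_lag, max_lag + 1):
        # r[lag] = sum_i a[i] * b[i + lag], over indices valid for both signals
        score = sum(mic_a[i] * mic_b[i + lag]
                    for i in range(max(0, -lag), min(n, n - lag)))
        if score > best_score:
            best_lag, best_score = lag, score
    return best_lag


def direction_of_arrival(mic_a, mic_b, fs, spacing_m):
    """Angle in degrees from broadside; positive means the source is on mic_a's side."""
    # Physically possible delays are bounded by the time sound needs to cross the spacing.
    max_lag = math.ceil(spacing_m / SPEED_OF_SOUND_M_S * fs)
    lag = estimate_delay(mic_a, mic_b, max_lag)
    # sin(theta) = c * tau / d; clamp so rounding never leaves asin's domain
    s = SPEED_OF_SOUND_M_S * (lag / fs) / spacing_m
    s = max(-1.0, min(1.0, s))
    return math.degrees(math.asin(s))
```

With a 10-sample delay at 48 kHz and 0.14 m spacing, this returns roughly 30.7 degrees. Two microphones give only the angle relative to the line joining them and cannot tell front from back, which is one reason an app might ask the user to rotate the phone.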

“If you slide the button provided by the app on the smartphone touch-screen panel all the way to maximum, it suppresses all noises so you cannot hear anything, similar to what a noise-canceling headphone does. However, the user can vary and adjust the button so that the background noise is suppressed and speech becomes clear to the user’s hearing level of comfort in different and varying noisy environments. We have developed the technology to meet different situations to help the user as much as possible,” said Panahi, the founding director of the Statistical Signal Processing Research Laboratory and the UT Acoustic Laboratory.
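The slider Panahi describes can be pictured as one parameter that sets how aggressively noise is removed. The article does not give the apps' actual mapping, so the sketch below is hypothetical: it applies power spectral subtraction per frequency bin and uses the slider to set the over-subtraction factor, so 0 passes the signal unchanged, 0.5 gives classic spectral subtraction and 1 mutes every bin, matching the behavior described at the maximum setting. The function name and the mapping beta = slider / (1 - slider) are illustrative assumptions, and `noise_power` is assumed to come from a separate noise estimator.

```python
import math


def suppression_gain(noisy_power, noise_power, slider):
    """Hypothetical per-bin magnitude gains for power spectral subtraction.

    slider: 0.0 (no suppression) ... 1.0 (mute everything).
    beta = slider / (1 - slider) maps 0 -> 0, 0.5 -> 1 and 1 -> infinity.
    """
    if not 0.0 <= slider <= 1.0:
        raise ValueError("slider must be in [0, 1]")
    if slider >= 1.0:
        # Maximum setting: suppress every bin, like a noise-canceling headphone.
        return [0.0 for _ in noisy_power]
    beta = slider / (1.0 - slider)
    gains = []
    for p, n in zip(noisy_power, noise_power):
        # Estimated clean power is p - beta * n, clipped at zero; the gain acts on magnitudes.
        ratio = 1.0 - beta * n / p if p > 0 else 0.0
        gains.append(math.sqrt(max(0.0, ratio)))
    return gains
```

In a complete system these gains would multiply the short-time Fourier transform of each frame before resynthesis. Learning-based methods, such as those described next, typically estimate comparable gains from data rather than from a fixed formula.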

Many of the new apps are based on novel deep neural-network and machine-learning techniques, which use large amounts of prerecorded real-life data. To develop the technology, the researchers first created massive databases of different clean speech signals, background noise signals and noisy speech signals that a person might encounter in daily life. These data are used to train the software and produce appropriate models. The models are then used by smartphone apps to produce clear speech signals in noisy environments.
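Noisy training examples of this kind are commonly synthesized by adding recorded noise to clean speech at controlled signal-to-noise ratios (SNRs), so each noisy clip has a known clean target. The sketch below shows that mixing step as a generic illustration, not the team's pipeline; the function names, SNR values and synthetic signals are assumptions.

```python
import math
import random


def power(x):
    """Mean power of a signal."""
    return sum(v * v for v in x) / len(x)


def mix_at_snr(clean, noise, snr_db):
    """Scale `noise` so 10*log10(P_clean / P_noise) == snr_db, then add it to `clean`."""
    if len(noise) < len(clean):
        raise ValueError("noise clip must be at least as long as the clean clip")
    noise = noise[:len(clean)]
    if power(noise) == 0.0:
        raise ValueError("noise clip is silent")
    scale = math.sqrt(power(clean) / (power(noise) * 10 ** (snr_db / 10)))
    scaled_noise = [scale * v for v in noise]
    noisy = [c + n for c, n in zip(clean, scaled_noise)]
    return noisy, scaled_noise


def make_training_pairs(clean_clips, noise_clips, snrs_db, seed=0):
    """Hypothetical helper: one (noisy, clean, snr) example per clean clip and SNR."""
    rng = random.Random(seed)
    pairs = []
    for clean in clean_clips:
        for snr in snrs_db:
            noise = rng.choice(noise_clips)
            # Take a random noise segment of the same length as the clean clip.
            start = rng.randrange(len(noise) - len(clean) + 1)
            noisy, _ = mix_at_snr(clean, noise[start:start + len(clean)], snr)
            pairs.append((noisy, clean, snr))
    return pairs
```

A model trained on such pairs learns to map each noisy input back toward its clean counterpart; covering a range of SNRs and noise types is what lets it generalize to varying real-world environments.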

The researchers have also published studies about a novel app that detects alert signals, such as emergency sirens and home alarms, and keeps them from being suppressed as background noise, so people with hearing loss can still hear them.
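The article does not describe how the alert detector works. One simple, hypothetical way to keep a tonal alarm from being treated as noise is to measure how much of a frame's energy sits at a known alarm frequency using the Goertzel algorithm, and to bypass suppression for frames that exceed a threshold. The 3.1 kHz tone, the 0.5 threshold and the function names are illustrative assumptions; sirens sweep in pitch, so a practical detector would need to track a moving tone or use a trained classifier.

```python
import math


def goertzel_power(frame, freq_hz, fs):
    """|X(f)|^2 of `frame` at a single frequency, via the Goertzel recurrence."""
    coeff = 2.0 * math.cos(2.0 * math.pi * freq_hz / fs)
    s_prev = s_prev2 = 0.0
    for x in frame:
        s = x + coeff * s_prev - s_prev2
        s_prev2, s_prev = s_prev, s
    return s_prev ** 2 + s_prev2 ** 2 - coeff * s_prev * s_prev2


def is_alert_frame(frame, fs, alarm_freqs_hz=(3100.0,), threshold=0.5):
    """Hypothetical check: is the frame's energy concentrated at a known alarm tone?"""
    energy = sum(x * x for x in frame)
    if energy == 0.0:
        return False
    n = len(frame)
    for f in alarm_freqs_hz:
        # About 1 for a pure sinusoid at f; about 2/n for broadband noise.
        ratio = goertzel_power(frame, f, fs) / (0.5 * n * energy)
        if ratio > threshold:
            return True
    return False
```

Frames flagged this way could simply be passed through with unit gain while the noise suppressor handles everything else.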

The methods, published papers, algorithms, code, user’s guides, technical documentation and audio/video demos are available on the team’s research lab website.

University of Texas at Dallas