When humans perform movements and actions in their surrounding environment, they typically feel that they own and control different parts of their body. Past theoretical studies have suggested that the sense of body ownership emerges from the integration of coherent multisensory signals in the brain. The sense of body agency, on the other hand, is thought to emerge from the match between the predicted and perceived outcomes of a person’s actions.

Past neuroscience research has found that both body ownership and body agency can be altered simply by presenting people with conflicting sensory stimuli. While the effects of these sensory illusions have been widely documented, the possible impact of body movement generation on these two perceptions is still poorly understood.

Researchers at ETH Zurich’s Rehabilitation Engineering Laboratory, the Laboratory of Cognitive Neuroscience at EPFL and the MySpace Lab at CHUV have recently carried out a study investigating how the interaction force between an agent and the surrounding environment contributes to the agent’s sense of hand ownership (SO) and sense of hand agency (SA). Their paper, published in Scientific Reports, outlines a new illusion through which SO and SA can become associated.

“The initial motivation for this study was that we observed a gap in existing literature about the relationship between two important components of subjective body experience, namely the sense of hand ownership (SO, ‘this is my hand’) and the sense of hand agency (SA, ‘I am controlling this hand’), and features of human/environment interactions,” Michel Akselrod, one of the researchers who carried out the study, told Medical Xpress. “We wanted to contribute to this topic by combining the expertise of three labs: the Rehabilitation Engineering Laboratory, expert in robotics and human/robot interactions (ETHZ, Switzerland), and the Laboratory of Cognitive Neuroscience (EPFL, Switzerland) and the MySpace Lab (CHUV, Switzerland), both experts in brain-body interactions and subjective body experience.”

Several other research teams have previously investigated the relationship between SO and SA. The study by Akselrod and his colleagues, however, introduces a new perspective that could help clarify which low-level sensorimotor characteristics contribute to subjective hand experiences, and how they do so.

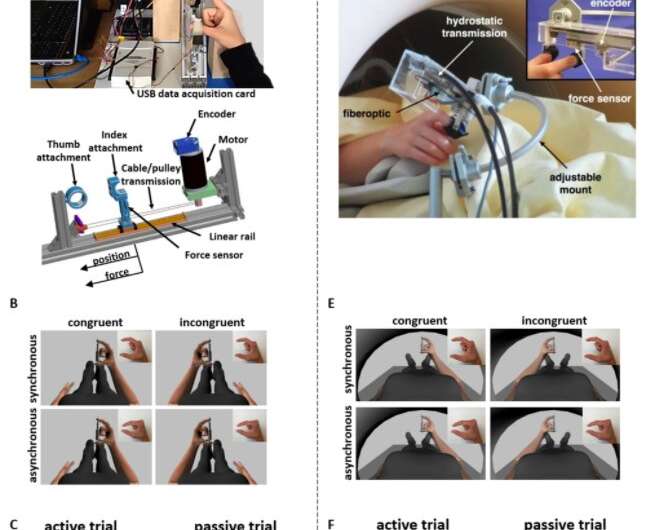

To conduct their experiments, the researchers developed a device that combines robotics and virtual reality (VR). Using this device, they were able to create the illusion that human agents ‘owned’ and ‘controlled’ a hand that was not actually their own, but that was instead presented to them in a virtual environment via a VR headset.

“This type of bodily illusion is often used in research to manipulate and study subjective hand experience, and the most famous one is the ‘rubber hand illusion,’” Akselrod explained. “By combining robotics and VR, we gained the ability to measure motor properties of movements, like the interaction force between the user and the device, and to control the visual feedback in unprecedented ways.”

Akselrod and his colleagues report two main findings. First, the interaction force between human agents and their surrounding environment parametrically modulates SA, but not SO. Second, an agent’s SO and SA interact beyond their common multisensory characteristics.

These results could have important implications for several fields that explore agent/environment interactions and subjective experience, including robotics, neuroprosthetics and physiotherapy.

“In the future, our work could help design better devices that will improve the feeling of control and acceptance from the users, for example amputees using prosthetic arms,” Akselrod added. “Continuing this line of research, we are currently interested in which brain regions are involved in SO and SA, and how they might interact with other brain regions processing low-level sensorimotor features of agent/environment interactions to explain our behavioral results.”

Ingrid Fadelli, Medical Xpress